A popular way to address this problem is the application of SLAM (Simultaneous Localisation and Mapping), a combination of algorithms and hardware that lets a device recognise the user’s environment in real time.

But SLAM implementation takes a lot of computational power and often requires specialised equipment, such as depth cameras. The team wanted ordinary local people to be able to experience the resource using just their mobile phones, so they settled on a solution that uses the technology already built into such devices.

It’s fair to say that each technological feature provided its own form of challenge. GPS (Global Positioning System – a commonly implemented satellite-based positioning technology) was used as a kind of ‘blunt tool’ to get the user located approximately within both the real and virtual worlds.

GPS for standard smartphones is only accurate to approx. 5 – 8.5m in good conditions. In an urban environment, particularly when the scene is being viewed from pavement level, tall buildings may be close to the user and lead to even worse performance. We solve this issue by defining specific viewpoints where the user should stand. The active viewpoint is chosen by selecting the viewpoint with the minimum Euclidian distance to the reported GPS location.

Project Research Paper
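The viewpoint selection described in that quote can be sketched in a few lines of Python. This is only an illustration, not the team's code: the viewpoint names and coordinates are hypothetical, and the conversion from latitude and longitude to local metres uses a simple equirectangular approximation, which is an assumption on my part (it is adequate over the few hundred metres of a site like this).

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def to_local_metres(lat, lon, ref_lat, ref_lon):
    """Approximate east/north offset in metres of (lat, lon) from a reference point."""
    east = math.radians(lon - ref_lon) * EARTH_RADIUS_M * math.cos(math.radians(ref_lat))
    north = math.radians(lat - ref_lat) * EARTH_RADIUS_M
    return east, north


def nearest_viewpoint(gps_lat, gps_lon, viewpoints):
    """Return the name of the viewpoint with the minimum Euclidean distance to the GPS fix."""
    def distance(item):
        _, (lat, lon) = item
        east, north = to_local_metres(lat, lon, gps_lat, gps_lon)
        return math.hypot(east, north)

    name, _ = min(viewpoints.items(), key=distance)
    return name


# Hypothetical viewpoints (name -> latitude, longitude); not taken from the paper.
VIEWPOINTS = {
    "viewpoint_a": (53.3850, -1.4630),
    "viewpoint_b": (53.3855, -1.4620),
    "viewpoint_c": (53.3845, -1.4640),
}

active = nearest_viewpoint(53.38548, -1.46205, VIEWPOINTS)
```

Because the user is asked to stand at a fixed, known spot, a GPS error of several metres only matters if it is large enough to pick the wrong viewpoint.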

The idea then was to use the mobile device’s internal compass to further hone the positioning, but the team’s tests concluded that the compass could not be trusted to be accurate enough for the task.

During testing, the reported angle was often found to be up to twenty or thirty degrees away from the true angle. Thus an accurate bearing could not be found. However, it gave an initial guess for the orientation, which roughly aligns the viewpoint direction with the Castlegate model so that relevant landmark buildings are in view.

Project Research Paper

A user-driven calibration then follows, in which the user swipes and rotates the virtual overlay until it aligns with the real-world image beneath it. This is perhaps not the most satisfying solution (it would be nice if the whole thing just automatically lined up), but I think we are starting to understand just how difficult a set of challenges this project presented to the technical team.
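One way to picture the combination of a rough compass reading and a manual correction is as an initial heading plus a user-controlled offset. The Python sketch below is my own illustration of that idea, not the project's implementation; the class name, the `degrees_per_pixel` sensitivity and the swipe units are all hypothetical.

```python
def wrap_degrees(angle):
    """Wrap any angle in degrees into the range [0, 360)."""
    return angle % 360.0


class YawCalibration:
    """Holds a rough compass heading and a user-supplied correction."""

    def __init__(self, compass_heading_deg):
        # The compass only supplies the starting guess (it may be 20-30 degrees out).
        self.initial = wrap_degrees(compass_heading_deg)
        self.offset = 0.0

    def apply_swipe(self, swipe_pixels, degrees_per_pixel=0.1):
        # Hypothetical mapping from a horizontal swipe to a rotation of the overlay.
        self.offset += swipe_pixels * degrees_per_pixel

    def yaw(self):
        """Current overlay heading: compass guess plus the user's correction."""
        return wrap_degrees(self.initial + self.offset)


calibration = YawCalibration(compass_heading_deg=350.0)
calibration.apply_swipe(250)   # user drags the overlay to the right
corrected = calibration.yaw()  # heading wraps past north
```

The compass gets the landmark buildings into view; the user then supplies the last few degrees that the sensor cannot.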

Back on the device itself, the gyroscope and accelerometer sensors are used to calculate roll and pitch on the fly, and thankfully, these turned out to be a reliable and accurate source of adjustment data. There is some deliciously detailed accounting in the research paper of the algorithmic tweaks used to get these elements to play nicely together, but I think they are best left to people more specialised than myself (Kalman filter? Euler integrator, anyone?).
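For the curious, here is a rough Python sketch of how gyroscope and accelerometer readings can be blended into roll and pitch. To be clear, this is a complementary filter, a simpler stand-in for the Kalman filter the paper mentions, and not the team's algorithm. The axis conventions, the `alpha` blend weight and the small-angle shortcut of treating gyro rates directly as roll and pitch rates are all assumptions made for illustration.

```python
import math


def accel_roll_pitch(ax, ay, az):
    """Estimate roll and pitch (radians) from the gravity direction seen by the accelerometer."""
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


def complementary_step(roll, pitch, gyro_x, gyro_y, accel, dt, alpha=0.98):
    """One filter update: Euler-integrate the gyro, then nudge towards the accelerometer."""
    # Euler integration of angular rates (rad/s) over the time step dt (s).
    roll_gyro = roll + gyro_x * dt
    pitch_gyro = pitch + gyro_y * dt
    # The accelerometer is noisy but does not drift, so it corrects the gyro slowly.
    roll_acc, pitch_acc = accel_roll_pitch(*accel)
    roll = alpha * roll_gyro + (1.0 - alpha) * roll_acc
    pitch = alpha * pitch_gyro + (1.0 - alpha) * pitch_acc
    return roll, pitch


# A stationary device held at a roll of 0.2 rad: the estimate converges to that tilt.
g = 9.81
accel = (0.0, g * math.sin(0.2), g * math.cos(0.2))
roll, pitch = 0.0, 0.0
for _ in range(500):
    roll, pitch = complementary_step(roll, pitch, 0.0, 0.0, accel, dt=0.01)
```

The gyroscope gives smooth short-term motion and the accelerometer gives a long-term reference for "down", which is why roll and pitch can be recovered reliably while compass-based yaw cannot.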

The system also calculates the position of the sun, so that realistic shadows and shading can be drawn, further enhancing the integration of the virtual elements into the real-world scene.
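To give a feel for what a sun position calculation involves, here is a simplified Python sketch using a common textbook approximation for solar declination and hour angle. It is not the method used in the project, which the article does not describe. It assumes the time is given as local solar time (noon is when the sun is highest) and ignores refinements such as the equation of time and atmospheric refraction.

```python
import math


def sun_position(latitude_deg, day_of_year, solar_hour):
    """Return (elevation, azimuth) in degrees; azimuth is measured clockwise from north."""
    # Approximate solar declination for the given day of the year.
    decl = math.radians(
        -23.44 * math.cos(math.radians(360.0 / 365.0 * (day_of_year + 10)))
    )
    # Hour angle: 15 degrees per hour away from solar noon, positive in the afternoon.
    hour_angle = math.radians(15.0 * (solar_hour - 12.0))
    lat = math.radians(latitude_deg)

    sin_elev = (math.sin(lat) * math.sin(decl)
                + math.cos(lat) * math.cos(decl) * math.cos(hour_angle))
    elevation = math.asin(max(-1.0, min(1.0, sin_elev)))

    # Azimuth measured from south (west positive), then shifted to a from-north bearing.
    az_from_south = math.atan2(
        math.sin(hour_angle),
        math.cos(hour_angle) * math.sin(lat) - math.tan(decl) * math.cos(lat),
    )
    azimuth = (math.degrees(az_from_south) + 180.0) % 360.0
    return math.degrees(elevation), azimuth


def shadow_direction(azimuth_deg):
    """Shadows fall directly away from the sun."""
    return (azimuth_deg + 180.0) % 360.0


# Roughly midsummer at Sheffield's latitude, at solar noon.
elevation, azimuth = sun_position(53.38, 172, 12.0)
```

With the sun's elevation and azimuth known, a renderer can place a directional light in the scene so that the virtual castle casts shadows in the same direction as the real buildings around it.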

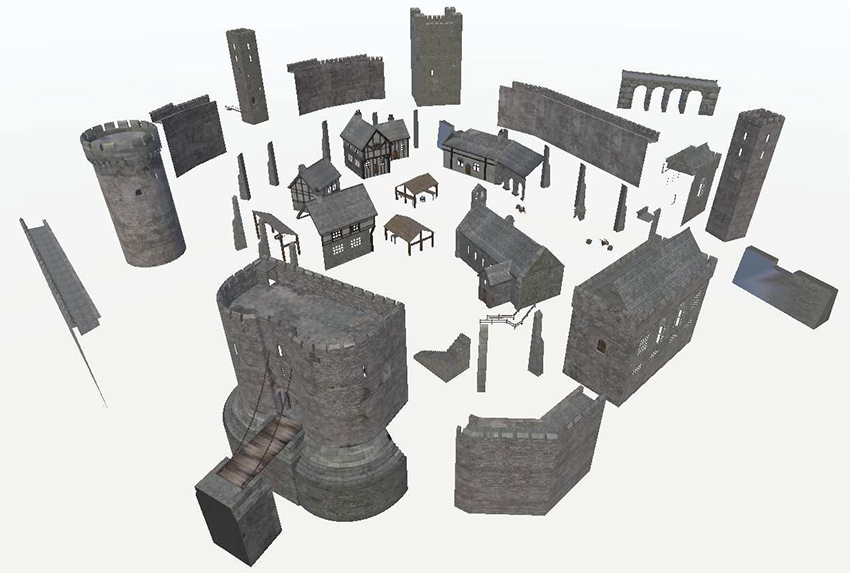

All in all, the 3D model of the castle itself contains around 31,000 polygons and 69 textures. The model of the surrounding area contains around 55,000 polygons. These are fairly modest figures for a model of this scale, and again reflect the effort to stay within the processing and memory limits of an experience delivered on an ordinary mobile device.

Like many interactive 3D environments, the project was built in Unity, the popular development platform that started out as a game engine. Some of the surrounding area was modelled in SketchUp, and Cinema 4D was also used for modelling.

According to the Experience Castlegate site, where you can try out a version of the model for yourself (tip: use the VR version, even on desktop), the project was always about using local history in a very specific way to help drive the regeneration efforts:

The aim was to use Castlegate as a testbed to demonstrate how immersive digital technologies can harness cultural heritage and community engagement in the field of urban regeneration. Virtual and on-site digital experiences can be used to promote conversations with people about the future of the site by engaging them in its history, revealing its current potential and raising aspirations for a locally relevant and vibrant future.

Experience Castlegate

There’s an informative video by the university about the wider project (which does indeed include some archaeological work of the traditional kind). It features the interactive model towards its end (at 03:11).

One of the future ambitions of this project is to integrate regeneration proposals into the 3D model itself, so that local people can experience them and offer feedback on their suitability. That would represent an impressive example of a project coming full circle and incorporating past, present and future versions of reality.

Recreating Sheffield’s medieval castle in situ using outdoor augmented reality – project research paper